Centralized AWS observability part 1

Reading time: 5 minutes and 43 seconds

Enforcing AWS AMI images with OPA

Reading time: 3 minutes and 3 seconds

In Part 2, we provided examples of how to enforce AWS tags to ensure compliance and governance within your organization. In this post, we extend those concepts by demonstrating how to enforce specific AMI IDs using OPA policies, helping you maintain control over the AMIs used in your infrastructure.

Every organization needs a robust AMI build process so that operating systems are consistently patched against security vulnerabilities. Controlling the AMI lifecycle lets an organization enforce compliance, reduce its exposure to risk, and keep its infrastructure secure and up to date, in line with best practices for infrastructure management and governance. The process is typically owned by the Security team, which builds and maintains the approved AMIs. The “customers” of these AMIs are other teams within the organization, such as Development, Operations, or QA, who rely on the pre-approved images so that their workloads meet security and compliance standards.
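As a rough illustration of the kind of check an AMI allow-list policy performs, here is a minimal Python sketch that scans a Terraform plan (in the JSON shape produced by terraform show -json) for EC2 instances whose AMI is not on an approved list. This is not the OPA policy from the post: the AMI IDs, the resource addresses, and the function name are hypothetical, and it only looks at aws_instance resources.

```python
# Hypothetical allow-list; in practice the Security team would publish these IDs.
APPROVED_AMIS = {"ami-0123456789abcdef0"}

# Hypothetical, heavily trimmed Terraform plan in JSON form.
SAMPLE_PLAN = {
    "resource_changes": [
        {"address": "aws_instance.web", "type": "aws_instance",
         "change": {"after": {"ami": "ami-0123456789abcdef0"}}},
        {"address": "aws_instance.batch", "type": "aws_instance",
         "change": {"after": {"ami": "ami-0fedcba9876543210"}}},
    ]
}


def unapproved_ami_violations(plan, approved=APPROVED_AMIS):
    """Return one message per aws_instance whose planned AMI is not approved."""
    violations = []
    for resource in plan.get("resource_changes", []):
        if resource.get("type") != "aws_instance":
            continue
        # "after" is null for resources that are being destroyed.
        after = (resource.get("change") or {}).get("after") or {}
        ami = after.get("ami")
        if ami is not None and ami not in approved:
            violations.append(
                f"{resource['address']} uses unapproved AMI '{ami}'"
            )
    return violations


if __name__ == "__main__":
    for message in unapproved_ami_violations(SAMPLE_PLAN):
        print(message)
```

With the sample plan above, only aws_instance.batch is reported, because its AMI is missing from the hypothetical allow-list.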

Enforcing AWS Resource Tags with OPA

Reading time: 7 minutes and 15 seconds

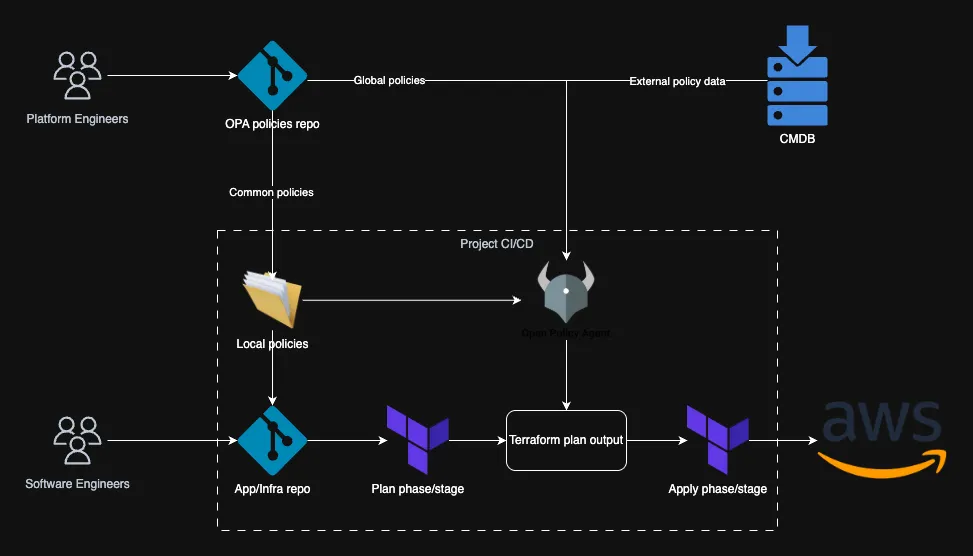

In Part 1 of this series, we covered the basics of Policy as Code and how the shift-left approach helps catch infrastructure mistakes early. In this post, we’re putting theory into practice: specifically, how to enforce an AWS tagging strategy using Open Policy Agent (OPA) during the Terraform plan phase.

This isn’t just about tagging hygiene. Good tagging is foundational for tracking cloud costs, understanding ownership, and avoiding dangerous or expensive mistakes.

Why Tags Matter

Tags seem trivial—until you’re staring at a $1M AWS bill and have no idea where it came from.

Static site with S3 and CloudFront

Reading time: 4 minutes and 54 seconds

First policy as code using OPA

Reading time: 5 minutes and 1 second

As I mentioned in my previous blog post, my first OPA policy caught just one simple parameter: whether force_destroy = true is set in the S3 Terraform module.

Here’s a simple OPA policy that will catch this dangerous configuration:

    package terraform.plan

    deny[msg] {
        # Find all resources in the plan
        resource := input.resource_changes[_]

        # Check if it's an S3 bucket
        resource.type == "aws_s3_bucket"

        # Look for force_destroy in the configuration
        resource.change.after.force_destroy == true

        msg := sprintf("S3 bucket '%s' has force_destroy set to true. This is dangerous as it allows bucket deletion even when not empty.", [resource.address])
    }
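To make the input shape concrete, the sketch below mirrors the rule's three checks in plain Python and runs them against a small sample plan. It is only an illustration of the logic, not a replacement for evaluating the Rego policy with OPA; the sample resource addresses and the function name are hypothetical, and the plan is a heavily trimmed version of what terraform show -json emits.

```python
import json

# Hypothetical, trimmed plan JSON with two buckets and one unrelated resource.
SAMPLE_PLAN = json.loads("""
{
  "resource_changes": [
    {"address": "aws_s3_bucket.logs", "type": "aws_s3_bucket",
     "change": {"after": {"force_destroy": true}}},
    {"address": "aws_s3_bucket.assets", "type": "aws_s3_bucket",
     "change": {"after": {"force_destroy": false}}},
    {"address": "aws_instance.web", "type": "aws_instance",
     "change": {"after": {"instance_type": "t3.micro"}}}
  ]
}
""")


def deny_force_destroy(plan):
    """Collect a message for every S3 bucket planned with force_destroy = true."""
    messages = []
    # Find all resources in the plan
    for resource in plan.get("resource_changes", []):
        # Check if it's an S3 bucket
        if resource.get("type") != "aws_s3_bucket":
            continue
        # Look for force_destroy in the planned ("after") configuration;
        # "after" is null when the resource is being destroyed.
        after = (resource.get("change") or {}).get("after") or {}
        if after.get("force_destroy") is True:
            messages.append(
                "S3 bucket '%s' has force_destroy set to true. This is dangerous "
                "as it allows bucket deletion even when not empty."
                % resource["address"]
            )
    return messages


if __name__ == "__main__":
    for message in deny_force_destroy(SAMPLE_PLAN):
        print(message)
```

Only aws_s3_bucket.logs is flagged here: the second bucket has force_destroy set to false, and the EC2 instance fails the type check.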

This policy works by:

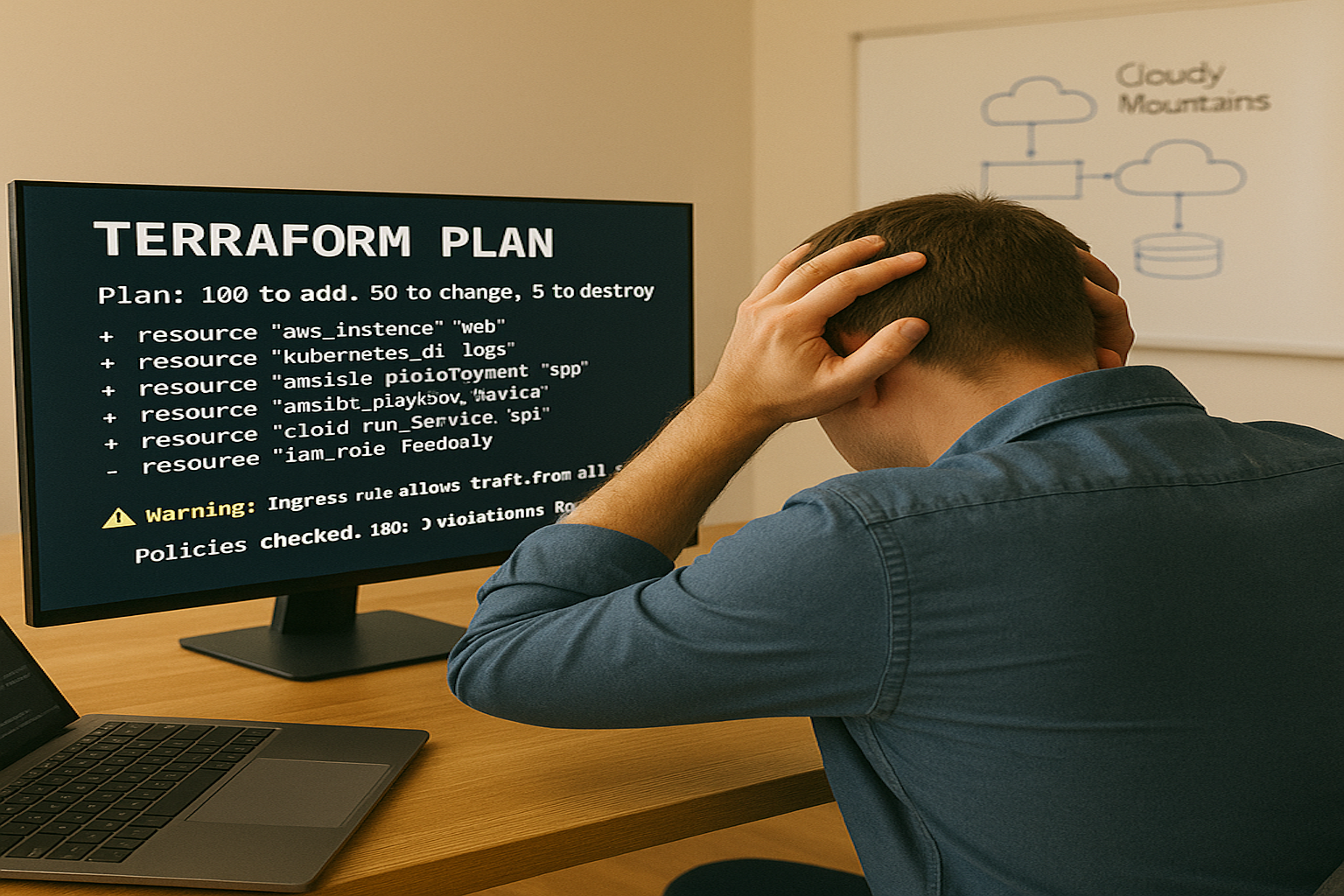

How I Learned the Hard Way That Terraform Needs Policy as Code

Reading time: 3 minutes and 14 seconds